| Author | Thread |

|

|

03/10/2012 12:46:51 AM · #1 |

A member asked me if I could create a chart or stats showing the difference between an image's actual score and the breakdown among Commenters, Participants, and Non-Participants.

I took one challenge (February 2012 Free Study) and worked out the difference manually. I'm trying to write a VBA macro to do it automatically and see how the stats change over time.

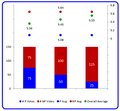

Here are the results from the test challenge above.

February 2012 Free Study Challenge

296 Entries (all Members)

1. Commenters: +1.1646

2. Participants: -0.0276

3. Non-Participants: +0.2438

Notice the linear trends.

This is what I gather from the stats of this challenge.

Collectively you may get a lower vote from participating voters; however, it seems to me these voters are more in tune with the challenge and vote very close to the image's actual average, whereas non-participating voters seem to be more liberal with their votes when they do not have an entry in the challenge.

I know this is from one challenge; I would like to see if the trend is similar over the course of multiple challenges.

Thought I would post and see what others think.

Scott

Message edited by author 2012-03-10 03:08:55.

|

|

|

|

03/10/2012 04:23:02 AM · #2 |

Another interesting chart on the same topic. This was taken from the open challenge "Indoor Macro Shot II."

Look at the Non-Participating and Participating lines, they are almost identical!

I had to go back and make sure I didn't make a mistake - I didn't!


Message edited by author 2012-03-10 04:26:18.

|

|

|

|

03/10/2012 09:17:21 AM · #3 |

| Left comment (actually a bunch of questions) on the last posted image. |

|

|

|

03/10/2012 09:25:00 AM · #4 |

Originally posted by SDW:

Look at the Non-Participating and Participating lines, they are almost identical!

I had to go back and make sure I didn't make a mistake - I didn't! |

That's bizarre. I only found one spot in a quick look that wasn't a mirror image.

|

|

|

|

03/10/2012 10:13:59 AM · #5 |

Originally posted by glad2badad:

Sorry, but I'm not really understanding the various values and what they mean. For example, the blue linear line starts below the score line but finishes over, yet the comment on the chart says participants voted on average below the actual score. Looks like the participants voted higher based on where the line ends up on the right. ???

Originally posted by glad2badad:

How is the line starting point on the left determined? |

All lines start [left side] with the first place entry and end with the last place entry [right side].

Originally posted by glad2badad:

How is the line ending point on the right determined? |

That is the last place image.

Originally posted by glad2badad:

The score line is a constant; the end result of the challenge for that entry, yes? |

Since this chart shows the deviation from the actual score, every entry's actual score plots at "0", so the score line is level.

Originally posted by glad2badad:

The thin jagged lines (participant / non-participant) represent individual votes cast and their over / under the score, correct? |

Yes. These lines represent the participating and non-participating averages of each entry [image], as can be found in the challenge results.

Originally posted by glad2badad:

How can you plot individual votes except for what's shown in the end results (ex: 2 votes of 1, 4 2's, 4 3's, .... 6 8's, 3 9's, etc...)? |

I can't plot individual votes per image, just the average per image as listed in the challenge results.

EXAMPLE: Let's take the 1st and last place images of this challenge.

First place image:

1st place with an average vote of 6.8208

Average Vote (Participants): 6.7250 (-0.0958 below the actual average)

Average Vote (Non-Participants): 6.9030 (+0.0822 above the actual average)

Last place image:

172nd place with an average vote of 2.7765

Average Vote (Participants): 2.9520 (+0.1755 above the actual average)

Average Vote (Non-Participants): 2.6210 (-0.1555 below the actual average)
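The arithmetic in this example can be reproduced with a few lines of code. This is only a sketch in Python (not the VBA macro mentioned at the start of the thread); the averages are the ones quoted above, while the function name `deviation` and the data layout are made up for illustration.

```python
# Per-entry averages copied from the challenge results quoted above:
# (label, overall average, participant average, non-participant average)
entries = [
    ("1st place", 6.8208, 6.7250, 6.9030),
    ("172nd place", 2.7765, 2.9520, 2.6210),
]


def deviation(group_avg, overall_avg):
    """Signed difference between a group's average and the overall average."""
    return round(group_avg - overall_avg, 4)


# One dict per entry holding the two deviations that the chart plots
deviations = {
    label: {
        "participants": deviation(p_avg, overall),
        "non_participants": deviation(np_avg, overall),
    }
    for label, overall, p_avg, np_avg in entries
}

for label, d in deviations.items():
    print(f"{label}: P {d['participants']:+.4f}, NP {d['non_participants']:+.4f}")
```

A negative value means that group voted the image below its final score, a positive value above it.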

|

|

|

|

03/10/2012 10:17:59 AM · #6 |

| Ah geez...makes total sense now. Sorry. I had a single image in mind with votes for that image rather than all entries in one challenge. Very cool! |

|

|

|

03/10/2012 10:22:27 AM · #7 |

It's like participants are voting the better images more harshly. Can you figure out the overall average votes for participants and non-participants?

I wonder if participants just vote everything lower, and it's more reflected in the graph for the higher scoring images. Would that logic be correct?

(It looks like for any statement that is true of participants, its opposite must be true of non-participants. Is that also correct?)

edit for clarity

Message edited by author 2012-03-10 10:23:24. |

|

|

|

03/10/2012 10:32:39 AM · #8 |

Almost looks like strategic voting, or unintentional bias, by participants. The "better" the image, better being defined as competitive, the more harshly participants voted it. On the other end of the scale, it almost looks like generous votes to keep the individual's average vote up, to avoid culling. But then that is just suspicious old me, and a feeling about the voting that I have had for years.

It is also one reason why I do not vote on the challenges I enter. If it is unconscious bias, I'd rather not contribute to that.

|

|

|

|

03/10/2012 10:57:04 AM · #9 |

Here is another chart of the same challenge. This time the chart shows the actual scores, with the deviation between Participants and Non-Participants.


Message edited by author 2012-03-10 10:57:26.

|

|

|

|

03/10/2012 11:02:50 AM · #10 |

| So no paranoia after all. |

|

|

|

03/10/2012 11:15:20 AM · #11 |

Originally posted by adigitalromance:

It's like participants are voting the better images more harshly. Can you figure out the overall average votes for participants and non-participants? |

Do you mean per entry or by challenge?

Originally posted by adigitalromance:

I wonder if participants just vote everything lower, and it's more reflected in the graph for the higher scoring images. Would that logic be correct? |

In my opinion, participants do tend to vote lower on higher quality images. I cannot say whether it's intentional or unintentional.

Originally posted by adigitalromance:

(It looks like for any statement that is true of participants, its opposite must be true of non-participants. Is that also correct?) |

To a degree. It is more prevalent in open challenges than member challenges.

Originally posted by adigitalromance:

edit for clarity |

|

|

|

|

03/10/2012 11:47:47 AM · #12 |

Originally posted by SDW:

Originally posted by adigitalromance:

It's like participants are voting the better images more harshly. Can you figure out the overall average votes for participants and non-participants? |

Do you mean per entry or by challenge? |

Per Challenge. |

|

|

|

03/10/2012 11:55:56 AM · #13 |

Originally posted by adigitalromance:

Originally posted by SDW:

Originally posted by adigitalromance:

It's like participants are voting the better images more harshly. Can you figure out the overall average votes for participants and non-participants? |

Do you mean per entry or by challenge? |

Per Challenge. |

I will see if I can remember the formula to extract votes per entry (N-P / P votes). I'm getting old so my memory slows. :) Then I can get the overall amount of votes for each and their average.

Message edited by author 2012-03-10 11:56:54.

|

|

|

|

03/10/2012 03:32:30 PM · #14 |

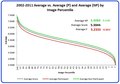

Here's data from a larger sample. The first two charts are based on the roughly 21,000 images submitted in 2011. I've plotted the average score, the participant average and the non-participant average against the percentile ranking of the images. I did this because the number of submissions varies from challenge to challenge:

This chart shows just the delta from average score for average participant and average non-participant by percentile:

I'm always doubtful of the practical impact of the difference between participant and non-participant scores (or of trolls), but it is interesting to see that the delta between non-participants and the average score is relatively flat, while for participants the delta is significantly greater in the higher percentile images. I still think we have to be careful in assigning any causes or motives for the difference though.
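For anyone who wants to try the same normalization, here is a minimal Python sketch of turning a finishing place into a percentile so that challenges of different sizes can share one axis. The exact convention behind the charts is not stated in this post, so the formula below (first place maps to 100, last place to 0) and the name `percentile_rank` are assumptions. The entry counts 172 and 296 come from earlier in the thread.

```python
def percentile_rank(place, num_entries):
    """Map a finishing place (1 = winner) to a 0-100 percentile.

    Assumed convention: first place -> 100, last place -> 0.
    """
    if num_entries < 2:
        return 100.0  # a single entry is trivially at the top
    return 100.0 * (num_entries - place) / (num_entries - 1)


# The same finishing place lands at a different percentile
# depending on how many entries the challenge had.
for num_entries in (172, 296):
    for place in (1, 10, num_entries):
        pct = percentile_rank(place, num_entries)
        print(f"{num_entries} entries, place {place}: percentile {pct:.1f}")
```

Once every image has a percentile, the participant and non-participant deltas can be averaged per percentile bucket across challenges.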

As I've mentioned before, another factor for consideration is that participants usually make up a smaller percentage of the total number of voters in any challenge. The fact that the overall average is closer to the non-participant average is a good indicator that the number of non-participant voters is higher. The following chart demonstrates this effect:

The bars at the bottom of the chart show the number of votes from each category (P vs. NP). Note that the total remains at 150 but the percentage of participant votes drops as you move to the right. The top of the chart shows the average of the votes. In the first sequence the overall average is equidistant from the participant and non-participant averages. As you move to the right, the participant and non-participant averages remain constant but the overall average draws closer to the non-participant average. Also, as the number of votes in a group drops, its average becomes more susceptible to a single vote, so the participant average may be affected more by extreme votes. Would you put more weight in an average of 25 votes or an average of 125 votes?
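The effect described above is just a vote-weighted average, and it can be sketched in a few lines of Python. Only the total of 150 votes comes from the post; the group averages (5.0 and 6.0) and the participant vote counts are hypothetical values picked for illustration, as is the function name `overall_average`.

```python
def overall_average(p_votes, p_avg, np_votes, np_avg):
    """Vote-weighted mean of the participant and non-participant averages."""
    total = p_votes + np_votes
    return (p_votes * p_avg + np_votes * np_avg) / total


TOTAL_VOTES = 150          # from the post: the total stays at 150
P_AVG, NP_AVG = 5.0, 6.0   # hypothetical group averages, held constant

rows = []
for p_votes in (75, 50, 25):   # hypothetical participant vote counts
    np_votes = TOTAL_VOTES - p_votes
    avg = overall_average(p_votes, P_AVG, np_votes, NP_AVG)
    rows.append((p_votes, np_votes, avg))

for p_votes, np_votes, avg in rows:
    print(f"P={p_votes:3d} NP={np_votes:3d} overall={avg:.4f}")
```

With equal group sizes the overall average sits midway between the two group averages; as the participant count shrinks it moves toward the non-participant average.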

Message edited by author 2012-03-12 20:58:47. |

|

|

|

03/10/2012 03:48:51 PM · #15 |

I have been doing this manually by copying and pasting the scores. Do you have a method of extracting the data automatically, such as an Excel macro/VBA?

I commented on each of your charts. Very nice information.

Scott

|

|

|

|

03/10/2012 03:59:06 PM · #16 |

| No matter the reason for the difference, thank goodness more participants don't vote or my average would be even lower. :-) |

|

|

|

03/10/2012 08:47:22 PM · #17 |

When you look at an even larger sample, 266,277 images submitted between 2002 and 2011, the effect seen in the 2011-only data is much smaller but still perceptible.

|

|

|

|

03/10/2012 08:55:45 PM · #18 |

Originally posted by DJWoodward:

As I've mentioned before, another factor for consideration is that participants usually make up a smaller percentage of the total number of voters in any challenge. |

Are you sure of this? I've always been certain (had faith?) that most of the people who voted in any challenge were participants. |

|

|

|

03/10/2012 09:09:01 PM · #19 |

Originally posted by GeneralE:

Originally posted by DJWoodward:

As I've mentioned before, another factor for consideration is that participants usually make up a smaller percentage of the total number of voters in any challenge. |

Are you sure of this? I've always been certain (had faith?) that most of the people who voted in any challenge were participants. |

I'm very sure of the theory. The group with the average closest to the overall image average is almost certainly the larger group. Unfortunately we don't get the numbers in the stats to prove it. I think you, Kirbic and I had this discussion in another thread previously :-)

Thread Link

look near the middle of the first page

Here's the key post from Kirbic:

The names have been changed to protect the innocent... in a recent challenge, there were 102 entries. For the top-scoring entry in that challenge, there were either 48 or 49 participant votes out of 218 total, and the remaining 169 or 170 votes were from non-participants. I increased the number of "entries" in my model, but the relative sizes of the voter pools should not depend strongly on the number of entries.

In fact, the pool of non-participants is *much* larger than the pool of participants, so if the probability of a non-participant casting votes is anywhere close to that of a participant, the number of participant votes will always be much lower. We are not specifically given the number of participant and non-participant votes, but it is possible in most cases to "back out" these numbers with some degree of accuracy.
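One way to "back out" the split is to treat the overall average as a vote-weighted mix of the two group averages and solve for the participant count. The Python sketch below assumes exactly that; it is not necessarily the method used in the linked thread, and the function names are made up for illustration. With total votes N, overall average A, participant average P and non-participant average NP, the relation A = (n_p * P + (N - n_p) * NP) / N gives n_p = N * (A - NP) / (P - NP).

```python
def participant_share(overall_avg, p_avg, np_avg):
    """Fraction of votes cast by participants, from the three published averages.

    Assumes the overall average is the vote-weighted mean of the two groups.
    Published averages are rounded, so the result is only approximate.
    """
    if p_avg == np_avg:
        raise ValueError("group averages are equal; the split cannot be recovered")
    return (overall_avg - np_avg) / (p_avg - np_avg)


def estimate_participant_votes(total_votes, overall_avg, p_avg, np_avg):
    """Nearest whole number of participant votes implied by the averages."""
    return round(total_votes * participant_share(overall_avg, p_avg, np_avg))


# Hypothetical image: 150 votes, overall 5.6667, participants 5.0, non-participants 6.0
print(estimate_participant_votes(150, 5.6667, 5.0, 6.0))

# Share implied by the first-place averages quoted earlier in this thread
print(participant_share(6.8208, 6.7250, 6.9030))
```

Because the averages are only published to four decimals, the estimate can be off by a vote when the two group averages are close together, which would explain an answer like "48 or 49".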

Message edited by author 2012-03-10 21:13:22. |

|

|

|

03/10/2012 09:46:08 PM · #20 |

Since we know how many votes an image received and we know the image score, is there a formula [with accuracy] that could tell us how many votes were Non-P and P votes? I have been racking my brain today because I should know this, after all the math courses I have taken. I guess I'm getting old and my sensor (brain) needs cleaning! :P

edit to add.

Message edited by author 2012-03-10 21:46:45.

|

|

|

|

03/10/2012 09:47:47 PM · #21 |

| If you say so ... I'm just quite surprised. Note that recently the number of votes/image seems to me to be smaller. |

|

|

|

03/10/2012 10:07:10 PM · #22 |

| I take it as a fact, not a theory, that a sizeable group of participants vote down the best images, especially now that the number of votes per image is much lower. |

|

|

|

03/10/2012 11:53:16 PM · #23 |

Originally posted by MargaretN:

I take it as a fact, not a theory, that a sizeable group of participants vote down the best images, especially now that the number of votes per image is much lower. |

Sorry, I just don't buy this as fact. Where is the data to prove that it's a sizeable group of participants? If the group were sizeable I think it would show in the image histograms, and we just don't see that. I'd expect to see more bimodal distributions on the best images if there was such a large group of participants doing this.

The data shows a difference between P and NP averages but it doesn't give us any insight as to why the difference exists and there could be many reasons other than strategic voting.

|

|

|

|

03/10/2012 11:57:49 PM · #24 |

Originally posted by SDW:

Since we know how many votes an image received and we know the image score, is there a formula [with accuracy] that could tell us how many votes were Non-P and P votes? I have been racking my brain today because I should know this, after all the math courses I have taken. I guess I'm getting old and my sensor (brain) needs cleaning! :P

edit to add. |

Kirbic indicates in the linked thread above that you could "back out" this info with some accuracy. |

|


DPChallenge, and website content and design, Copyright © 2001-2025 Challenging Technologies, LLC.

All digital photo copyrights belong to the photographers and may not be used without permission.
