02/09/2013 01:19:04 PM · #76

Originally posted by DJWoodward:

Could anybody that has a DPC account give that image a 10?

I think some people award a 10 to whatever they consider the "best" image in the challenge pool, but not necessarily because it is an indescribably superlative example of the photographic art.

Otherwise I agree, and note that if people spent as much time commenting as they do trying to figure out why some photos score what they do, we'd have a lot fewer threads complaining about a lack of comments ... ;-)

02/09/2013 02:10:59 PM · #77

A few more thoughts on this…

Originally posted by mikeee:

As DPCers, I think we work on 3 different levels simultaneously.

We are all (well, most of us) quite nasty, and the scores given by challenge participants are lower than those given by non-participants. This underhand voting-down of the competition is exaggerated by DPC's blind voting system. If I wanted to, I could vote down any images I thought were competition to my image; this would have little overall effect on an image's score, but collectively, we all do the same. There seems to be an element of looking for flaws in other people's photos if they are in competition with our own perfect image; it seems bizarre to give high scores, through your own actions, to images that are going to beat yours.

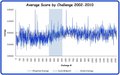

I just don’t believe this happens on a large scale. Look at the chart. It shows a phenomenon that doesn’t seem to be recognized by the people who believe top images are under attack by low voters...

- The data is the average of the top 25 positions of the first 32 challenges of 2012 (I haven’t scrubbed any data since then, or I would use more).

- Notice that the increase in score from position 25 to position 8 is almost linear.

- From position 7 to 1, the scores curve upward, away from the linear trend line.

To me this indicates that the top finishers actually stand out increasingly from the crowd after voting. If the best images were under constant attack by collective low voting, this wouldn’t happen. If anything, the top finishers are getting a bounce from people who vote relatively high on their favorite images, rather than relatively low on their competition. If low voters are attacking, somebody else is offsetting their efforts. It just isn’t the problem that some would believe it to be.

Originally posted by mikeee:

Therefore, scores don't really matter. Some of the best scores on DPC came in the early days, from images shot on poor equipment by enthusiastic amateurs who took part because it seemed a fun idea, and the individual scores were so much higher than now.

Another fallacy: after an initial DPC honeymoon of high scores, averages settled to a level lower than today's.

I do agree that participants vote somewhat lower than non-participants, but nobody has been able to offer any evidence of the cause. It may not be a nefarious reason; they may just be more discriminating.

02/09/2013 02:24:55 PM · #78

Originally posted by DJWoodward:

- The data is the average of the top 25 positions of the first 32 challenges of 2012 (I haven’t scrubbed any data since then, or I would use more).

- Notice that the increase in score from position 25 to position 8 is almost linear.

- From position 7 to 1, the scores curve upward, away from the linear trend line.

To me this indicates that the top finishers actually stand out increasingly from the crowd after voting. If the best images were under constant attack by collective low voting, this wouldn’t happen. If anything, the top finishers are getting a bounce from people who vote relatively high on their favorite images, rather than relatively low on their competition. If low voters are attacking, somebody else is offsetting their efforts. It just isn’t the problem that some would believe it to be.

Considering the varying topics covered, the regularity of those scores is pretty amazing; the only statistical anomaly might be how closely the actual results follow the predicted line ...

02/09/2013 02:38:51 PM · #79

To be clear, the predicted line is based on the lower values only, so it is expected that they follow it. I did this to demonstrate that the top finishers depart from what would otherwise be a very consistent slope. A trend line of all of the data would look different, but the top 3 images would still depart sharply above it.
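
The approach described here (fit a straight line to the lower positions only, then see how far the top positions sit above it) can be sketched in Python. This is a minimal illustration, not the actual spreadsheet behind the chart: the function names are hypothetical, and the per-position averages are made-up numbers chosen only to show the shape of the calculation, not the real 2012 data.

```python
import statistics


def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def departures_from_trend(avg_by_position, fit_positions):
    """Fit a line to fit_positions only, then return each position's
    actual average minus the value predicted by that line."""
    xs = list(fit_positions)
    slope, intercept = fit_line(xs, [avg_by_position[p] for p in xs])
    return {p: avg - (intercept + slope * p) for p, avg in avg_by_position.items()}


# Made-up averages: exactly linear for positions 8-25, plus a bump for 1-7.
bump = {1: 0.30, 2: 0.20, 3: 0.12, 4: 0.07, 5: 0.04, 6: 0.02, 7: 0.01}
averages = {p: 7.0 - 0.04 * p + bump.get(p, 0.0) for p in range(1, 26)}

residuals = departures_from_trend(averages, range(8, 26))
for position in range(1, 8):
    print(position, round(residuals[position], 3))
```

Because the line is fitted to positions 8 to 25 only, residuals there are near zero by construction; the interesting numbers are the positive residuals for positions 1 to 7.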

02/09/2013 04:46:51 PM · #80

let's just agree that DPC is a stacked deck for the commercial/stock pros. fine art gets shafted. big budget wins. that's just the way it is. sure it would be nice to have "divisions" or "leagues" for art vs. commercial, and for genres like journalism and sports. but that would be a pain to administer. oh well.

Originally posted by mikeee:

As DPCers, I think we work on 3 different levels simultaneously.

1. We want the acceptance of our peers and strive to produce something that will make others think we are good photographers. This drives our desire to improve and provides a useful excuse for poor entries: the 'I'm just here to learn' excuse. My current entry is poor. In fact, it's very poor. Hovering around 5.0x is probably quite a good score for an image that relied on 2 people coordinating a shutter and a hand-held, hand-fired 25-year-old flash (I taped a safety pin across the contacts to make a switch!). But without a challenge I wouldn't have invested lots of time in my image (far too much time, TBH), and I wouldn't have 'improved' by learning fundamental lessons about lighting, balancing natural and flash lighting, working within white balance settings, etc. I learned more from this image than from any of my other entries, so I genuinely don't care about the score. It has also taught me that a decent flash and remote triggers would have produced a much better result, more consistently and with significantly less effort. I could have taken an easier image from a car window, processed it to death and scored higher than my gargantuan effort in my pathetic indoor studio, but I genuinely wanted to learn, and now that I have, I'm going to use my skills and newly ordered flash kit to kick butt in the near future!

2. We are also competitors and really want to beat our peers (which is why we all enter these challenges). The skills of professional photographers, and of seriously talented amateurs, with professional kit and years of experience mean that the highest places are usually reserved for a small elite, with the occasional 'lucky break' for somebody outside it. To ribbon, you generally need a photo that 'conforms', or at least upsets the fewest people.

3. We are all (well, most of us) quite nasty, and the scores given by challenge participants are lower than those given by non-participants. This underhand voting-down of the competition is exaggerated by DPC's blind voting system. If I wanted to, I could vote down any images I thought were competition to my image; this would have little overall effect on an image's score, but collectively, we all do the same. There seems to be an element of looking for flaws in other people's photos if they are in competition with our own perfect image; it seems bizarre to give high scores, through your own actions, to images that are going to beat yours. Therefore, scores don't really matter. Some of the best scores on DPC came in the early days, from images shot on poor equipment by enthusiastic amateurs who took part because it seemed a fun idea, and the individual scores were so much higher than now.

Example...

In the Road Signs challenge the scores ranged from 6.9291 to 3.7333 (ignoring Venser's thrust for brown), meaning that only about a third of the scoring range was used, and in a few cases the difference between placings was decided by a few high or low votes. I took 6th place, which I was really, really pleased with, but I don't think that a score of 6.25xx would reflect a similar placing in any other competition. If you asked an average photographer what score they thought the photo deserved, and they couldn't hide behind anonymous voting, I think they'd give it a higher score than 6.25. It got three 3s and eleven 4s! How can anybody that has a DPC account give that image a 3? But the same scoring rules apply to all images, so it evens out. My current image deserves a lot of 3s, just to show I'm objective about my efforts ;)

I also compete in cycling competitions which are determined by a simple scoring system where the first rider across the line wins; objective scoring without dispute. Use whatever tactics you want to, but the winner wins in open competition. Anticipating the 'What about Lance?' retort, that doesn't happen at local cycling races because the rewards are so low and the costs of doing it would be so great.

Anyway, I just wanted to get some thoughts down which some people might find interesting, some may dispute and some may ignore.
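
The "only about a third of the scoring range" figure for Road Signs can be checked directly, assuming DPC's 1 to 10 voting scale. A small Python sketch using only the two scores quoted in the post:

```python
SCALE_MIN, SCALE_MAX = 1, 10  # DPC votes run from 1 to 10

high, low = 6.9291, 3.7333  # top and bottom Road Signs scores quoted above
fraction_used = (high - low) / (SCALE_MAX - SCALE_MIN)

print(f"spread {high - low:.4f} of a possible {SCALE_MAX - SCALE_MIN}")
print(f"fraction of the scale used: {fraction_used:.1%}")
```

That works out to a spread of 3.1958 points, roughly 35.5% of the scale, so slightly more than a third.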

02/09/2013 04:53:28 PM · #81

forgot to include the obvious when it comes to #3 nastiness ... add the politics aspect and we get the coward scores against the recent obama image. 8 1's - that is shameful and discredits all of us. perhaps we all should agree to "abstain" if we see jingoism that makes us want to puke, or war toys glorified, or people of color we hate, or an ideology we don't agree with. either that or line up those 8 cowards and have them tell us why that image scored a 1 in their estimation. right.

02/09/2013 05:16:48 PM · #82

Originally posted by GeneralE:

I think some people award a 10 to whatever they consider the "best" image in the challenge pool, but not necessarily because it is an indescribably superlative example of the photographic art.

Exactly. In every challenge, I put at least one image in every vote block, regardless of how good the worst image is or how bad the best image is.

02/09/2013 05:22:13 PM · #83

Originally posted by DJWoodward:


What data did you use for these? Was it the median or average data?

What does the weighted average figure relate to?

Message edited by author 2013-02-09 17:28:53.

02/09/2013 05:25:04 PM · #84

Originally posted by DJWoodward:

It just isn’t the problem that some would believe it to be.

You mean the sky isn't falling. What? You have the numbers to back it up.

I would have thought that with all the trolls lurking in the sewers and the Monday voters (or is it the Tuesday voters people hate this week?), averages would have been way down compared to yesteryear.

Message edited by author 2013-02-09 17:25:18.

02/09/2013 05:28:50 PM · #85

Originally posted by mikeee:

What data did you use for these? Was it the median data?

What does the weighted average figure relate to?

I'm going to assume he just grabbed the average score of each challenge, readily found on the challenge history pages, and plotted them against the challenge number. Scale midpoint is 5.5, the average one would expect if votes were normally distributed around the middle of the 1 to 10 scale. The grand average looks to be the average of the averages of the challenges used in his plots. He's showing that average scores have increased significantly since the genesis of DPC.

02/09/2013 05:30:43 PM · #86

Originally posted by GeneralE:

I think some people award a 10 to whatever they consider the "best" image in the challenge pool, but not necessarily because it is an indescribably superlative example of the photographic art.

So are you saying voters give only one 10 score per challenge? I don't do that. I vote on each individual picture based on whether I like it or not. I might give only one 10, or I could give ten 10's. I don't compare pictures to each other.

02/09/2013 05:33:31 PM · #87

Originally posted by GeneralE:

Originally posted by DJWoodward:

Could anybody that has a DPC account give that image a 10?

I think some people award a 10 to whatever they consider the "best" image in the challenge pool, but not necessarily because it is an indescribably superlative example of the photographic art.

Otherwise I agree, and note that if people spent as much time commenting as they do trying to figure out why some photos score what they do, we'd have a lot fewer threads complaining about a lack of comments ... ;-)

By the way, I hope my sarcasm wasn't missed. I have no problem with any image receiving a 10 and any strategy for scoring is OK with me. The numbers tend to show that all of the strategies balance out. The OP used the phrase “How can anybody that has a DPC account give that image a 3?” It was just a play on his words. :-)

02/09/2013 05:35:53 PM · #88

Originally posted by Marc923:

Originally posted by GeneralE:

I think some people award a 10 to whatever they consider the "best" image in the challenge pool, but not necessarily because it is an indescribably superlative example of the photographic art.

So are you saying voters give only one 10 score per challenge? I don't do that. I vote on each individual picture based on whether I like it or not. I might give only one 10, or I could give ten 10's. I don't compare pictures to each other.

I totally compare pictures to each other. I vote on a curve, with one or two images per challenge getting 1's and 10's, a few more getting 2's and 9's, and about 80% getting 5's and 6's. I also try to keep my average score per challenge somewhere near 5.5, which often means going back after I vote, and bumping up a few images that I was on the fence about.

So, to each his own.
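
Voting "on a curve" like this can be sketched as a rank-based mapping: sort the images by your gut impression, then hand out scores so that each score covers a fixed share of the challenge. The shares in `TARGET_SHARES` are a hypothetical reading of the description above (one or two 1s and 10s, a few more 2s and 9s, about 80% 5s and 6s); they are symmetric, so the votes average 5.5 without a manual adjustment pass. The function and variable names are illustrative only, not anything DPC provides.

```python
from collections import Counter

# Hypothetical share of images (in percent) that get each score, best first.
# Symmetric around the middle of the scale, so the votes average 5.5.
TARGET_SHARES = [
    (10, 2), (9, 4), (8, 2), (7, 2), (6, 40),
    (5, 40), (4, 2), (3, 2), (2, 4), (1, 2),
]


def curve_votes(impressions: dict[str, float]) -> dict[str, int]:
    """Turn raw impressions (higher = better) into 1-10 votes by rank."""
    n = len(impressions)
    ranked = sorted(impressions, key=impressions.get, reverse=True)
    votes = {}
    for rank, image in enumerate(ranked):
        cumulative = 0
        for score, percent in TARGET_SHARES:
            cumulative += percent
            # Is this image's midpoint percentile, (rank + 0.5) / n * 100,
            # inside the band? Checked with integers to avoid rounding issues.
            if (2 * rank + 1) * 100 < 2 * cumulative * n:
                votes[image] = score
                break
    return votes


# Usage: 100 images with made-up impressions 0..99.
impressions = {f"img{i:03d}": float(i) for i in range(100)}
votes = curve_votes(impressions)
print(Counter(votes.values()))
print(sum(votes.values()) / len(votes))
```

With a challenge size that isn't a multiple of 100, the bands round unevenly and the average can drift a little from 5.5, which is where going back and bumping up a few fence-sitters would come in.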

02/09/2013 05:47:16 PM · #89

Originally posted by Venser:

Originally posted by mikeee:

What data did you use for these? Was it the median data?

What does the weighted average figure relate to?

I'm going to assume he just grabbed the average score of each challenge, readily found on the challenge history pages, and plotted them against the challenge number. Scale midpoint is 5.5, the average one would expect if votes were normally distributed around the middle of the 1 to 10 scale. The grand average looks to be the average of the averages of the challenges used in his plots. He's showing that average scores have increased significantly since the genesis of DPC.

Not quite...

I scrubbed the average score for every image in every challenge between 2002 and 2010 (actually through early 2012, when I stopped pulling data). The plotted data is the average of all of the image averages in each challenge. It could have been done as you suggested, but I wanted all of the individual image data to play with. So the grand average is the weighted average of all of the images in the time period.

On the weighted average: when I first started these stats I wanted to be sensitive to "averaging averages", i.e. a simple average of the averages of an image with 300 votes and an image with only 100 votes isn't completely accurate. Here’s the concept:

Link

Some people who are better statisticians than I say that this wasn't necessary. I can tell you that the results of the weighted average and the simple average of averages were not that different.
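
The difference between a simple average of image averages and a vote-weighted average can be shown with the 300-vote and 100-vote case described above. A minimal Python sketch; the image averages of 6.0 and 4.0 are hypothetical numbers picked only for illustration, and the function names are made up:

```python
def simple_average_of_averages(images):
    """Every image counts equally, however many votes it received."""
    return sum(avg for avg, _ in images) / len(images)


def vote_weighted_average(images):
    """Each image's average is weighted by its vote count. This equals the
    plain mean of every individual vote pooled together."""
    total_votes = sum(n for _, n in images)
    return sum(avg * n for avg, n in images) / total_votes


# (average score, number of votes) for two hypothetical images
images = [(6.0, 300), (4.0, 100)]

print(simple_average_of_averages(images))  # 5.0
print(vote_weighted_average(images))       # 5.5
```

When vote counts are similar across images, the two numbers converge (with identical counts they are equal), which fits the observation that the results were not that different in practice.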

02/09/2013 05:51:34 PM · #90

Originally posted by DJWoodward:

The numbers tend to show that all of the strategies balance out.

As they should; it's the central limit theorem.
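
A quick simulation illustrates why mixed voting strategies wash out once many votes are averaged. It is a sketch under made-up assumptions: each hypothetical voter adds a fixed strategy bias (harsh, neutral or generous) plus random noise to an image's "true" quality, and the vote is rounded and clamped to 1 to 10. The shrinking spread of the averages is the law of large numbers at work, with the central limit theorem describing the roughly bell-shaped distribution of those averages; both assume the votes are roughly independent.

```python
import random
import statistics

# Made-up strategy biases: harsh, neutral and generous voters.
STRATEGY_BIASES = [-1.5, 0.0, 1.0]
NOISE_SD = 1.5  # made-up spread of individual taste


def one_vote(quality, rng):
    """One voter: true quality + strategy bias + noise, rounded into 1..10."""
    raw = quality + rng.choice(STRATEGY_BIASES) + rng.gauss(0, NOISE_SD)
    return min(10, max(1, round(raw)))


def image_average(quality, n_votes, rng):
    return statistics.fmean(one_vote(quality, rng) for _ in range(n_votes))


def spread_of_averages(quality, n_votes, trials=500, seed=1):
    """Standard deviation of an image's average score over repeated challenges."""
    rng = random.Random(seed)
    return statistics.stdev(image_average(quality, n_votes, rng) for _ in range(trials))


for n_votes in (1, 10, 100):
    print(n_votes, round(spread_of_averages(5.5, n_votes), 3))

# Both images face the same mix of strategies, so the better one should
# usually come out ahead once enough votes are in.
rng = random.Random(2)
print(image_average(6.0, 200, rng) > image_average(5.0, 200, rng))
```

The strategy biases shift every image's average by about the same amount, so they change the overall level of scores more than the order of the images.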

02/09/2013 05:52:46 PM · #91

Originally posted by Venser:

Originally posted by DJWoodward:

It just isn’t the problem that some would believe it to be.

You mean the sky isn't falling. What? You have the numbers to back it up.

I would have thought that with all the trolls lurking in the sewers and the Monday voters (or is it the Tuesday voters people hate this week?), averages would have been way down compared to yesteryear.

LOL,

I think that the "Great Troll Extermination" of 2006 was the reason for the improved scores.

02/09/2013 05:54:47 PM · #92

Originally posted by Venser:

Originally posted by DJWoodward:

The numbers tend to show that all of the strategies balance out.

As they should; it's the central limit theorem.

Exactly; the central limit theorem is our friend. Vive la différence

02/09/2013 05:59:53 PM · #93

Originally posted by Marc923:

So are you saying voters give only one 10 score per challenge? I don't do that. I vote on each individual picture based on whether I like it or not. I might give only one 10, or I could give ten 10's. I don't compare pictures to each other.

Originally posted by Ann:

I totally compare pictures to each other. I vote on a curve, with one or two images per challenge getting 1's and 10's, a few more getting 2's and 9's, and about 80% getting 5's and 6's. I also try to keep my average score per challenge somewhere near 5.5, which often means going back after I vote, and bumping up a few images that I was on the fence about. So, to each his own.

Both are perfectly acceptable approaches, in my humble opinion. :-)

02/09/2013 09:08:22 PM · #94

Originally posted by Marc923:

Originally posted by GeneralE:

I think some people award a 10 to whatever they consider the "best" image in the challenge pool, but not necessarily because it is an indescribably superlative example of the photographic art.

So are you saying voters give only one 10 score per challenge? I don't do that. I vote on each individual picture based on whether I like it or not. I might give only one 10, or I could give ten 10's. I don't compare pictures to each other.

No, I'm saying (some) people will give at least one 10 in every challenge, even if none of the images is a "great" picture.

Originally posted by DJWoodward:

Both are perfectly acceptable approaches, in my humble opinion. :-)

Ditto -- as long as you apply your voting strategy to the entire challenge.

BTW: I guess I did (partially) miss the sarcasm earlier ... :-(

DPChallenge, and website content and design, Copyright © 2001-2024 Challenging Technologies, LLC.

All digital photo copyrights belong to the photographers and may not be used without permission.
